To compute the cross entropy loss between the input and target (predicted and actual) values, we apply the function CrossEntropyLoss(). It creates a criterion that measures the cross entropy loss. It is a loss function provided by the torch.nn module. Loss functions are used to optimize a deep neural network by minimizing the loss. CrossEntropyLoss() is very useful in training multiclass classification problems. The input is expected to contain unnormalized scores (logits) for each class. The target tensor may contain either class indices in the range [0, C-1], where C is the number of classes, or class probabilities.

To compute the cross entropy loss, one could follow the steps given below:

- Import the required library. In all the following examples, the required Python library is torch. Make sure you have already installed it.
- Create the input and target tensors and print them, for example `target = torch.empty(3, dtype = torch.long).random_(5)`.
- Create a criterion to measure the cross entropy loss.
- Compute the cross entropy loss and print it.

Note: In the following examples, we are using random numbers to generate input and target tensors. So, you may notice that you are getting different values of these tensors.

Example 1

In this example, we compute the cross entropy loss between the input and target. Here we have taken the example of a target tensor with class indices.
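The steps for a target of class indices can be sketched as follows. This is a minimal illustration rather than the original listing: the input shape `(3, 5)`, the name `input_tensor`, and the fixed seed are assumptions chosen to match the 3 samples and 5 classes implied by the target tensor. The last lines also show a class-probability target, which assumes PyTorch 1.10 or newer.

```python
import torch
import torch.nn as nn

# Assumption: a fixed seed, used only so that repeated runs print the same tensors.
torch.manual_seed(0)

# Unnormalized scores (logits) for 3 samples and 5 classes.
input_tensor = torch.rand(3, 5)
# One class index in [0, 4] per sample.
target = torch.empty(3, dtype=torch.long).random_(5)
print("input:", input_tensor)
print("target:", target)

# Create the criterion; the default reduction averages over the samples.
criterion = nn.CrossEntropyLoss()

# Compute the cross entropy loss and print it.
loss = criterion(input_tensor, target)
print("loss:", loss)

# Target given as class probabilities instead: a float tensor with the
# same shape as the input, where each row sums to 1.
target_probs = torch.softmax(torch.rand(3, 5), dim=1)
loss_probs = criterion(input_tensor, target_probs)
print("loss with probability targets:", loss_probs)
```

Because the tensors are random, the printed values depend on the seed and on the PyTorch version.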

We should get the same results: print(loss, ce, loss_manual) Loss_manual = -1 * torch.log(probs1).gather(1, target.unsqueeze(1))

Pytorch cross entropy loss manual#

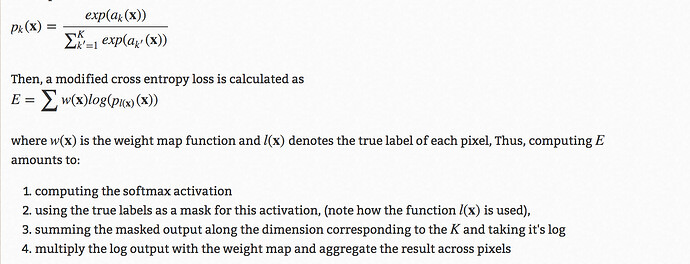

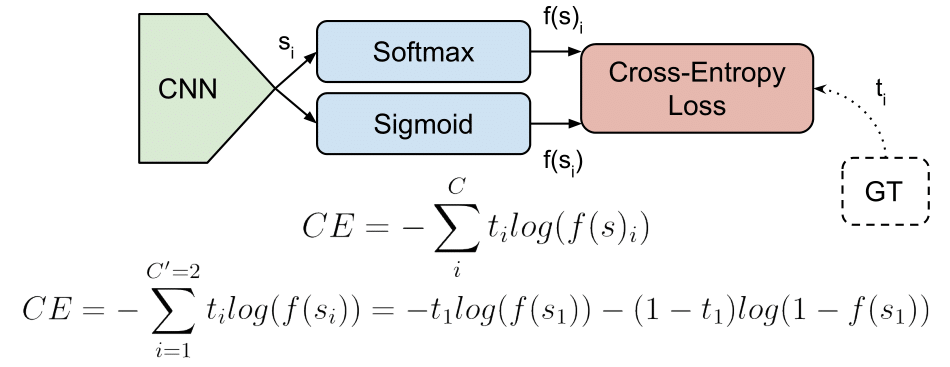

While the manual approach from the PyTorch docs would give you: # Using the formula from the docs One_hot = F.one_hot(target, num_classes = 3)Ĭe = (one_hot * torch.log(probs1 + 1e-7)) The manual approach from your formula would correspond to: # Manual approach using your formula Loss = criterion(torch.log(probs1), target) Given that you’ll get: criterion = nn.NLLLoss(reduction='sum') This approach is just to demonstrate the formula and shouldn’t be used, as torch.log(torch.softmax()) is less numerically stable than F.log_softmax.Īlso, the default reduction for the criteria in PyTorch will calculate the average over the observations, so lets use reduction='sum'. So, let’s change the criterion to nn.NLLLoss and apply the torch.log manually. Note that you are not using nn.CrossEntropyLoss correctly, as this criterion expects logits and will apply F.log_softmax internally, while probs already contains probabilities, as explained. How can I know if this loss is beign computed correctly? I am trying this example here using Cross Entropy Loss from PyTorch: probs1 = torch.tensor([[Įach pixel along the 3 channels corresponds to a probability distribution…there is a probability distribution for each position of the tensor…and the target has the classes for each distribution. I took some examples calculated using the expression above and executed it using the Cross Entropy Loss in PyTorch and the results were not the same. I was performing some tests here and result of the Cross Entropy Loss in PyTorch doesn’t match with the result using the expression below: Please, someone could explain the math under the hood of Cross Entropy Loss in PyTorch?
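The comparison described in the answer can be sketched end to end as below. The values in `probs` and `target` are hypothetical, since the original `probs1` tensor is cut off, and a plain 2D case (2 observations, 3 classes) is assumed instead of the per-pixel layout from the question. The explicit `.sum()` calls and the final `nn.CrossEntropyLoss` check are additions for illustration, not part of the quoted answer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical probabilities: 2 observations, 3 classes, each row sums to 1.
probs = torch.tensor([[0.7, 0.2, 0.1],
                      [0.1, 0.3, 0.6]])
target = torch.tensor([0, 2])

# nn.NLLLoss expects log-probabilities, so torch.log is applied manually.
# reduction='sum' adds up the per-observation losses instead of averaging.
criterion = nn.NLLLoss(reduction="sum")
loss = criterion(torch.log(probs), target)

# Manual approach with a one-hot target: keep only the log-probability
# of the true class, then negate and sum.
one_hot = F.one_hot(target, num_classes=3)
ce = -(one_hot * torch.log(probs + 1e-7)).sum()

# Manual approach with gather: pick the log-probability at each target index.
loss_manual = -torch.log(probs).gather(1, target.unsqueeze(1)).sum()

# Cross-check with nn.CrossEntropyLoss, which applies log_softmax internally.
# log(probs) can serve as logits here because softmax(log p) = p when the
# rows of p sum to 1.
loss_ce = nn.CrossEntropyLoss(reduction="sum")(torch.log(probs), target)

print(loss, ce, loss_manual, loss_ce)
```

All four values should agree up to floating point error. When starting from raw logits, passing them straight to `nn.CrossEntropyLoss` (or using `F.log_softmax` with `nn.NLLLoss`) avoids the less stable `torch.log(torch.softmax(...))` route.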
